Multimodal output opens up new possibilities

Having true multimodal output opens up interesting new possibilities in chatbots. For example, Gemini 2.0 Flash can play interactive graphical games or generate stories with consistent illustrations, maintaining character and setting continuity across multiple images. It is far from perfect, but character consistency is a new capability for AI assistants. We tried it out and it was pretty wild, especially when it generated a view of a photo we provided from another angle.

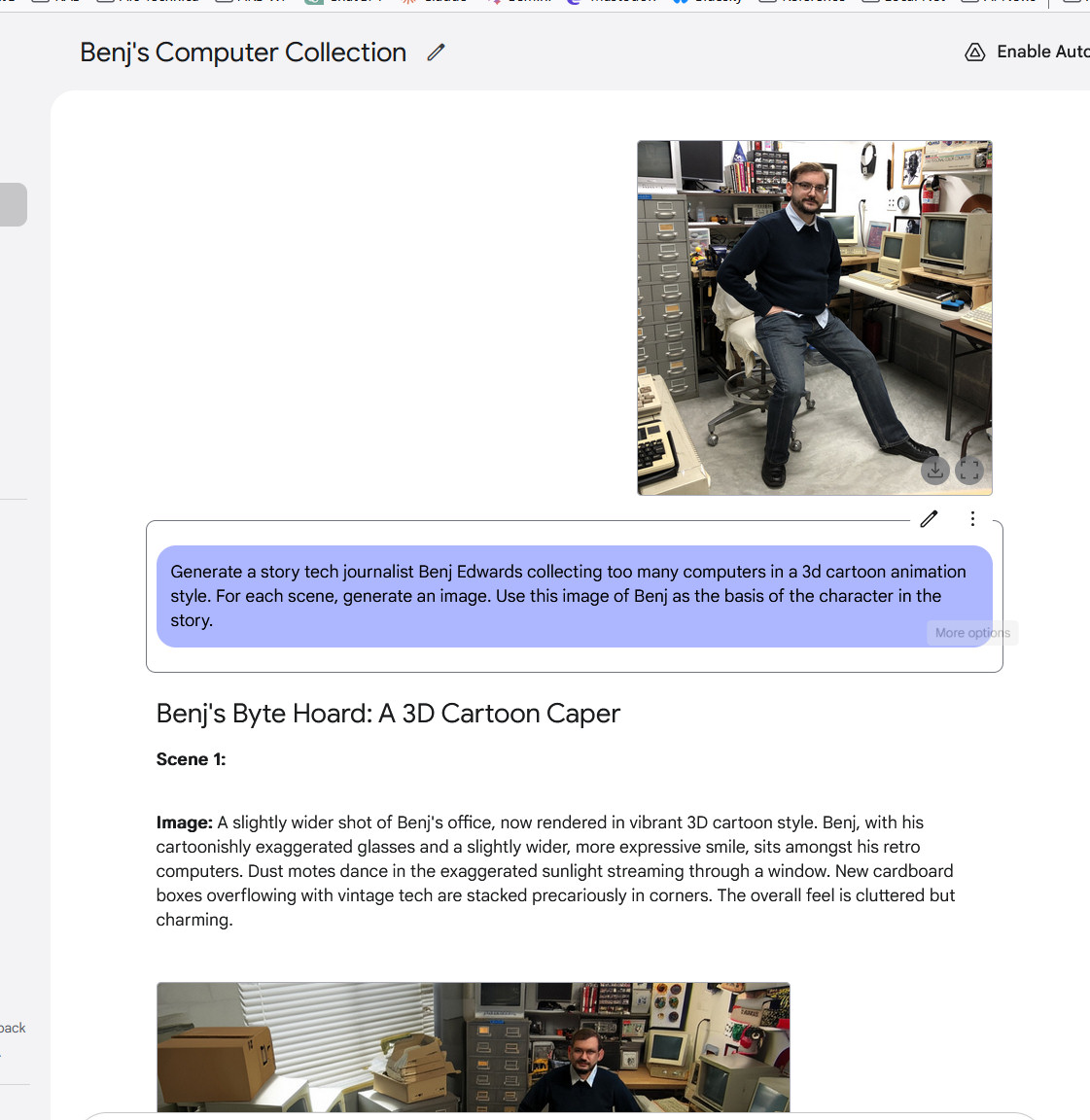

Creating a multi-image story with Gemini 2.0 Flash, part 1.

Google / Benj Edwards

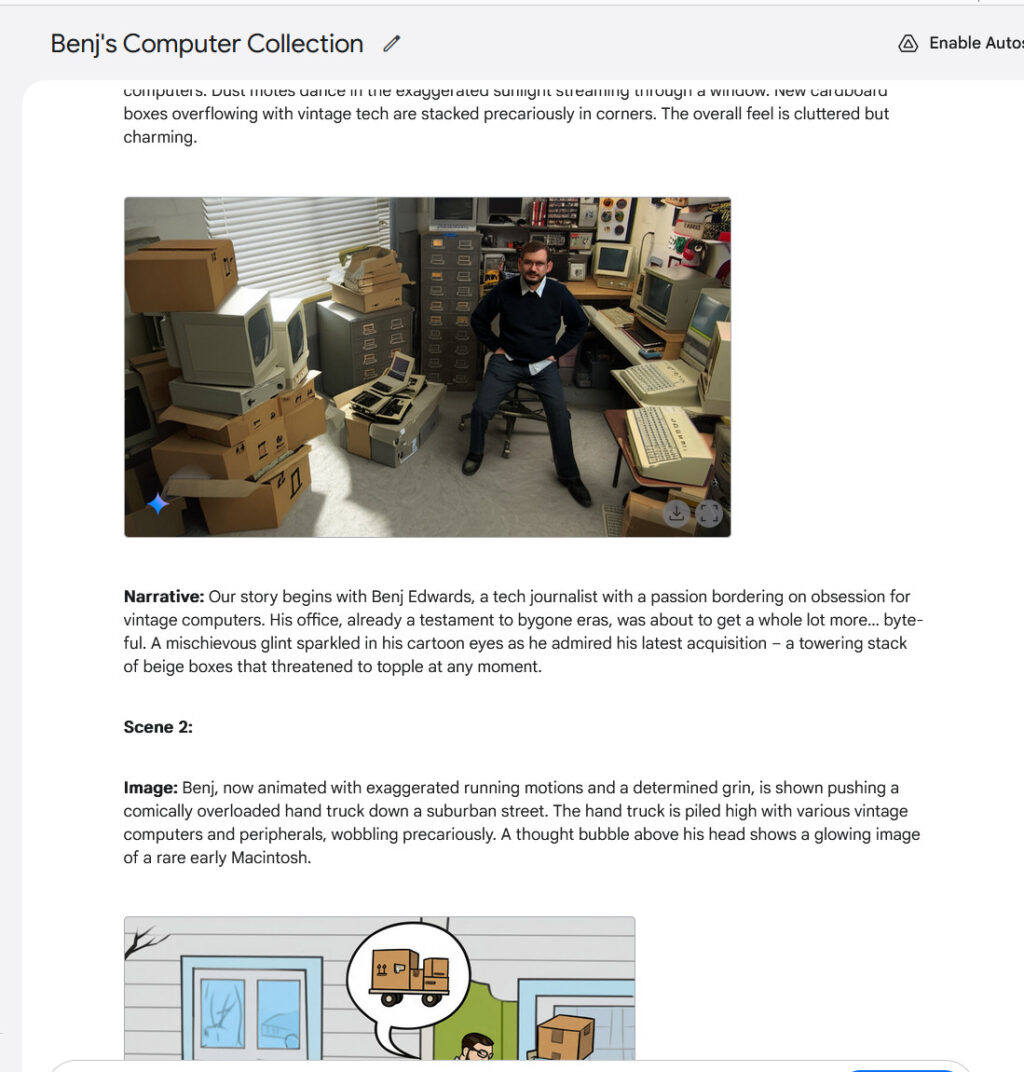

Creating a multi-image story with Gemini 2.0 Flash, part 2. Notice the alternative angle of the original image.

Google / Benj Edwards

Creating a multi-image story with Gemini 2.0 Flash, part 3.

Google / Benj Edwards

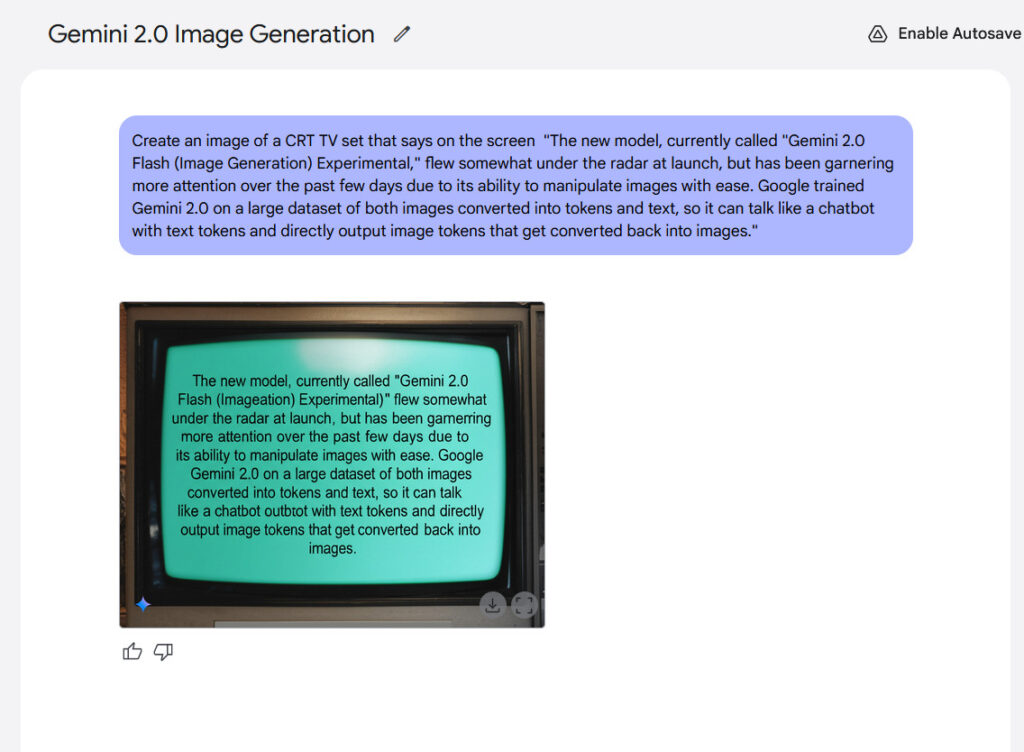

Text rendering represents another potential strength of the model. Google claims that its internal benchmarks show Gemini 2.0 Flash performing better than what it describes as leading competing models when generating images that contain text, making it potentially suitable for creating content with integrated text. In our experience, the results were not that exciting, but they were legible.

An example of in-image text rendering generated with Gemini 2.0 Flash.

Credit: Google / Ars Technica

Despite Gemini 2.0 Flash's shortcomings so far, the emergence of true multimodal image output feels like a notable moment in AI history because of what it suggests if the technology continues to improve. If you imagine a future, say 10 years from now, where a sufficiently complex AI model could generate any type of media in real time (text, images, audio, video, 3D graphics, 3D-printed physical objects, and interactive experiences), you basically have a holodeck, but without the matter replication.

Coming back to reality, it is still "early days" for multimodal image output, and Google recognizes that. Recall that Flash 2.0 is intended to be a smaller AI model that is faster and cheaper to run, so it has not absorbed the entire breadth of the Internet. All that information takes up a lot of space in terms of parameter count, and more parameters mean more compute. Instead, Google trained Gemini 2.0 Flash by feeding it a curated dataset that also likely included targeted synthetic data. As a result, the model does not "know" everything visual about the world, and Google itself says the training data is "broad and general, not absolute or complete."

That is just a fancy way of saying that the image output quality is not perfect yet. But there is plenty of room for improvement in the future to incorporate more visual "knowledge" as training techniques advance and compute drops in cost. If the process becomes anything like what we have seen with diffusion-based AI image generators such as Stable Diffusion, Midjourney, and Flux, multimodal image output quality may improve rapidly over a short period of time. Get ready for a completely fluid media reality.