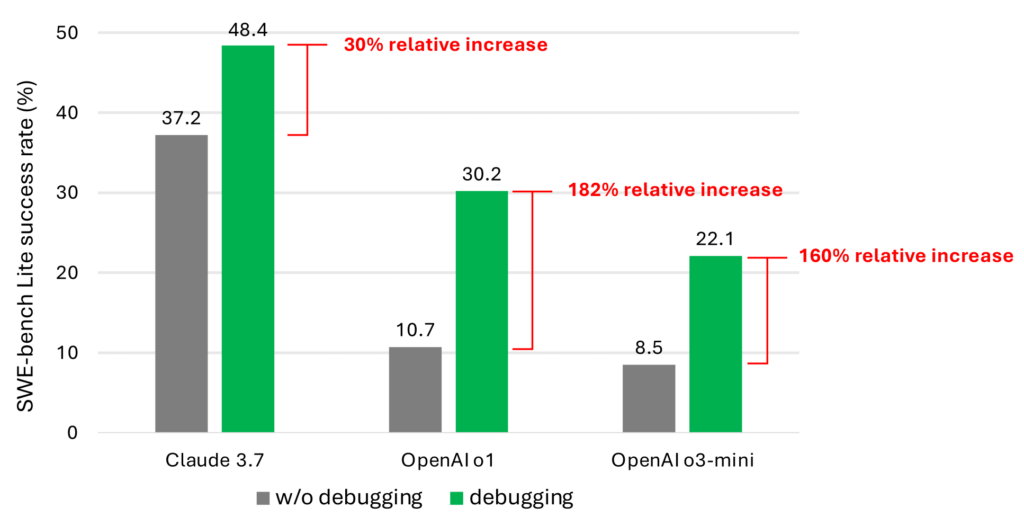

This approach is much more successful than relying on the models as they are commonly used, but when your best case is a 48.4 percent success rate, you are not ready for prime time. The limitations likely arise because the models do not fully understand how best to use the tools, and because their current training data is not tailored to this use case.

The blog post attributes this to a lack of data representing decision-making behavior (e.g., debugging traces) in the current LLM training corpus. It adds, however, that the significant improvement in performance confirms that this is a promising direction for research.

The post claims that this preliminary report is only the start of the effort. The next step is to fine-tune an information-seeking model that specializes in collecting the information needed to resolve bugs. If the model is large, the best way to save on inference costs may be to build a smaller information-seeking model that can provide relevant information to the larger one.

This is not the first time we have seen results suggesting that some of the more ambitious ideas about AI agents directly replacing developers are far from reality. Several studies have already shown that although an AI tool can sometimes create an application that seems acceptable to the user for a narrow task, the models tend to produce code laden with bugs and security vulnerabilities, and they are generally unable to fix these issues.

This is an early step on the path to AI coding agents, but most researchers agree that the likeliest best outcome is an agent that saves a human developer a lot of time, not one that can do everything a developer can do.