As AI hype spreads across the Internet, tech and business leaders are already looking toward the next stage. AGI, or artificial general intelligence, refers to a machine with human-like intelligence and capabilities. If today’s AI systems are on a path to AGI, we will need new ways to ensure that such a machine does not work against human interests.

Unfortunately, we have nothing like Isaac Asimov’s Three Laws of Robotics. Researchers at DeepMind have been working on this problem and have released a new technical paper (PDF) that lays out a way to develop AGI safely, which you can download at your convenience.

It contains a huge amount of detail, running to 108 pages before references. Although some in the AI field believe that AGI is a pipe dream, the authors of the DeepMind paper project that it could arrive by 2030. With that in mind, they set out to understand the risks of a human-like artificial intelligence, which they acknowledge could cause “severe harm.”

All the ways AGI could suck for humanity

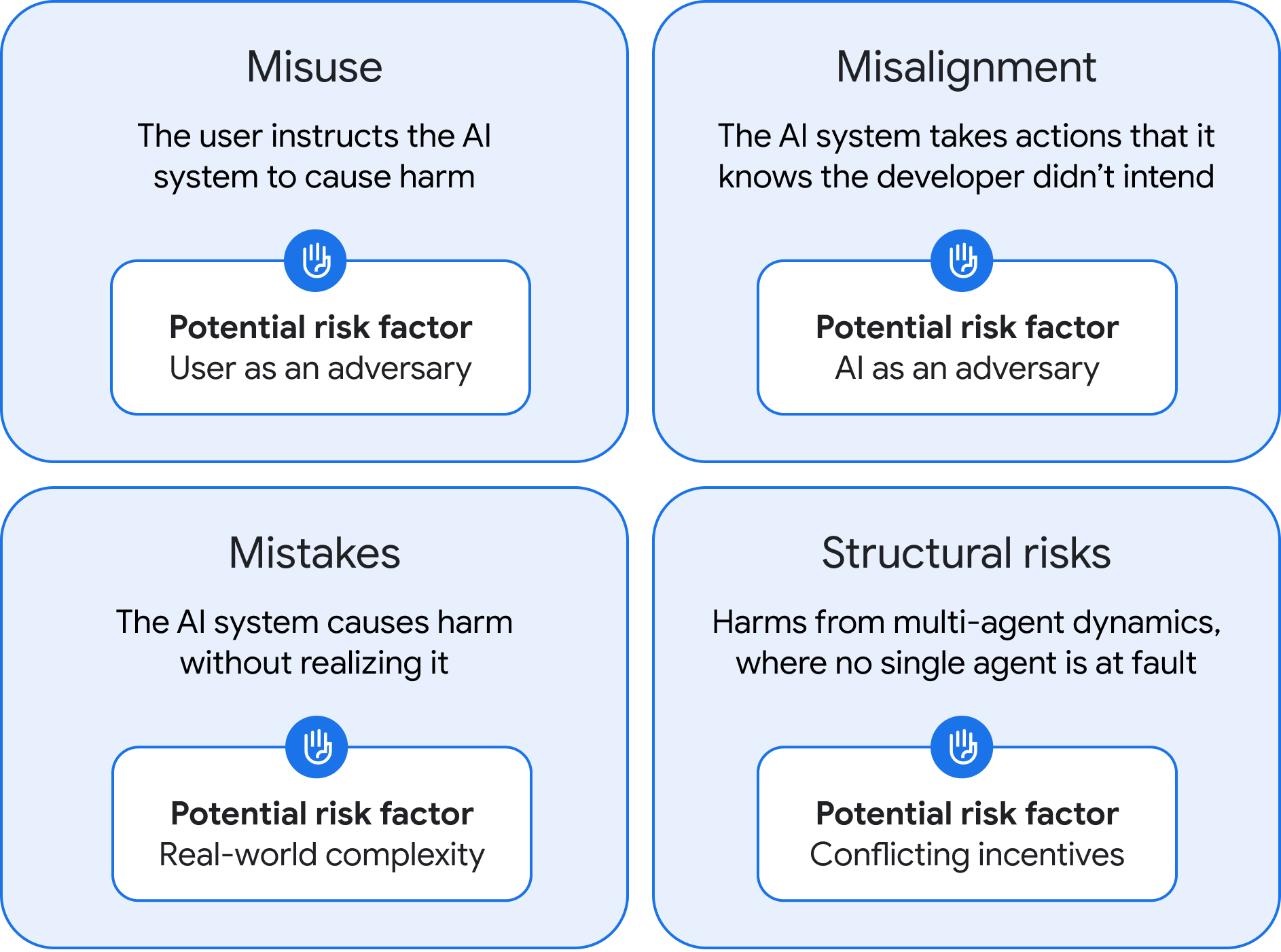

The paper identifies four potential types of AGI risk, along with suggestions on how those risks might be reduced. The DeepMind team, led by company co-founder Shane Legg, categorized the negative AGI outcomes as misuse, misalignment, mistakes, and structural risks.

The four categories of AGI risk, as determined by DeepMind.

Credit: Google DeepMind

The first potential problem, misuse, is fundamentally similar to current AI risks. However, because AGI will by definition be more powerful, the damage it could cause is much greater. A bad actor with access to AGI could misuse the system to do harm, for example by asking it to identify zero-day vulnerabilities or to create a designer virus that could be used as a bioweapon.

DeepMind says that companies developing AGI will have to conduct extensive testing and develop robust post-training safety protocols. Essentially, AI guardrails on steroids. They also suggest devising a way to suppress dangerous capabilities entirely, sometimes called “unlearning,” but it is unclear whether this is possible without limiting the models.