Project Moohan looks like a traditional VR or AR headset.

Credit: Ryan Whitwam

Compare that to the Meta Ray-Bans. Google Glass put its UI in the corner of your vision, but the Android XR glasses keep the UI in the middle. It is semi-transparent and can be a bit difficult to focus on at first, and it shows less information than you get with Moohan.

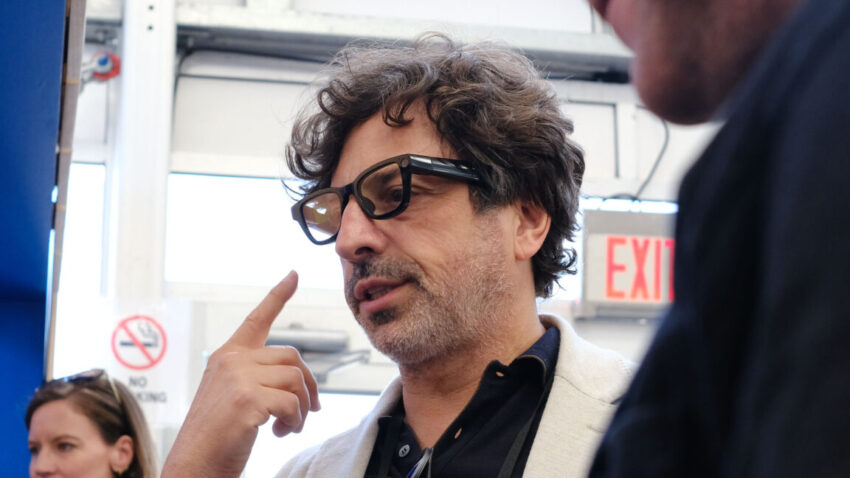

The glasses contain cameras, microphones, and open-air speakers. Everything is neatly packed into a frame that barely differs from an ordinary pair of glasses. The only hint that they’re “smart” is the chunkier temples, which hold the silicon and the battery. The glasses tap into the same Gemini Live you can access through the Gemini app, connecting wirelessly to a phone. The experience is different when it’s on your face, though.

The XR glasses have a touch-sensitive region on the temple, which you can long-press to activate Gemini. A tap pauses and resumes the AI assistant, which is an important function. The demo area for Android XR glasses was a maximalist playground full of travel books, art, various tchotchkes, and an espresso machine. Since Gemini can see everything you’re looking at, you can ask it things on the fly. Where was this photo taken? What is the name of this bridge? How do I make espresso?

From the front, you can barely tell there’s anything “smart” about these glasses.

Credit: Ryan Whitwam

Gemini answers these questions (mostly), and when appropriate, the lenses show text on the display. The glasses also support using the camera to snap photos, which are previewed in the XR interface. That’s definitely something you can’t do with the Meta Ray-Bans.

AI for your face, by the end of this year

As Google announced at I/O, everyone now has access to Gemini Live on their phone, so you can already experience many of the AI interactions these prototypes make possible. But it feels different to have Gemini always ready to share your view of the world, which makes access to its AI features much smoother.