Does size matter?

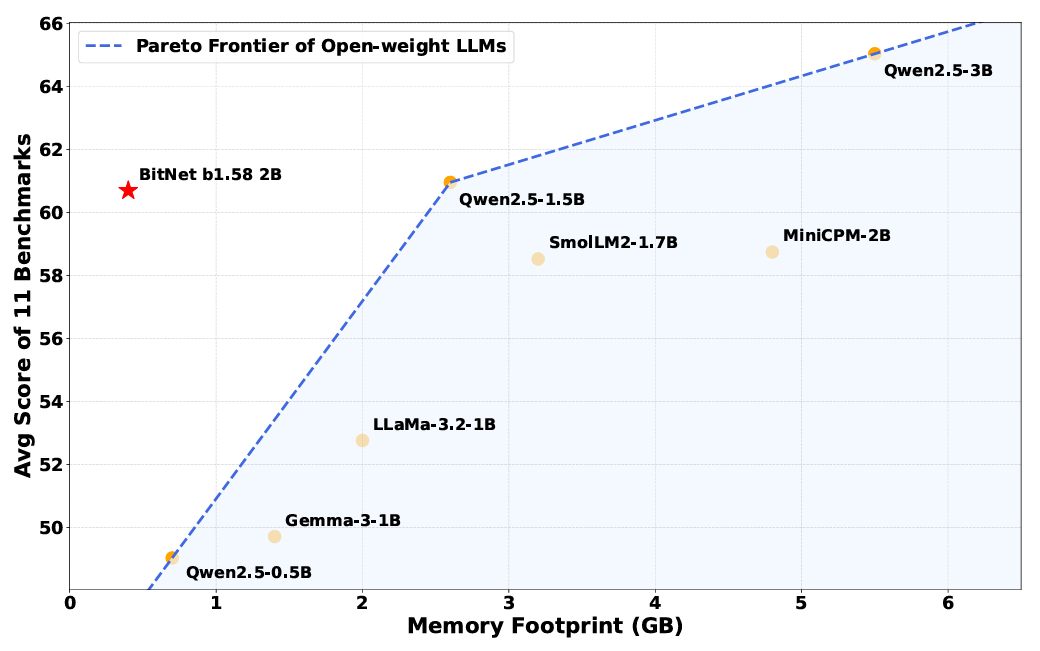

Memory requirements are the most obvious advantage of reducing the complexity of a model's internal weights. The BitNet b1.58 model can run using just 0.4GB of memory, compared to anywhere from 2 to 5GB for other open-weight models of roughly the same parameter size.
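A back-of-the-envelope calculation shows where a figure like 0.4GB can come from. The sketch below assumes a hypothetical 2-billion-parameter model, assumes the "1.58" in the name refers to log2(3) bits for a three-valued weight, and counts weight storage only (no activations or cache). The function name is illustrative and is not taken from the researchers' code.

```python
import math


def weight_memory_gb(num_params: int, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


PARAMS = 2_000_000_000       # assumption: hypothetical parameter count
TERNARY_BITS = math.log2(3)  # assumption: about 1.58 bits per three-valued weight

for label, bits in [("ternary", TERNARY_BITS), ("16-bit", 16), ("32-bit", 32)]:
    print(f"{label}: {weight_memory_gb(PARAMS, bits):.2f} GB")
```

Under these assumptions, the ternary weights come to roughly 0.4GB, while the same parameter count at 16 bits per weight needs about ten times as much.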

But the simplified weighting system also leads to more efficient operation at inference time, with internal operations that rely much more on simple addition instructions and less on computationally costly multiplication instructions. The researchers estimate that these efficiency improvements mean BitNet b1.58 uses 85 to 96 percent less energy than similar full-precision models.
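To illustrate why simple weights shift the work from multiplication to addition, here is a minimal sketch that assumes every weight is one of -1, 0, or 1. The function `ternary_matvec` is a hypothetical name for illustration; real kernels pack weights into bits, use vector instructions, and apply scaling factors that are omitted here.

```python
def ternary_matvec(weights, x):
    """Multiply a matrix of -1/0/1 weights by a vector using only adds and subtracts."""
    out = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi   # a weight of +1 adds the input
            elif w == -1:
                acc -= xi   # a weight of -1 subtracts the input
            # a weight of 0 contributes nothing, so the input is skipped
        out.append(acc)
    return out


W = [[1, 0, -1],
     [-1, 1, 1]]            # assumption: toy ternary weight matrix
x = [0.5, 2.0, -1.0]

result = ternary_matvec(W, x)
# Reference computed with ordinary multiplication, for comparison
reference = [sum(w * xi for w, xi in zip(row, x)) for row in W]
print(result, reference)
```

The inner loop never multiplies: each weight only decides whether an input is added, subtracted, or ignored, and the result matches the ordinary multiply-and-sum reference.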

A demo of BitNet b1.58 running at speed on an Apple M2 CPU.

By using a highly optimized kernel designed specifically for the BitNet architecture, the BitNet b1.58 model can also run multiple times faster than similar models running on a standard full-precision transformer. The researchers write that the system is efficient enough to reach speeds comparable to human reading (5-7 tokens per second) using a single CPU. You can download and run those optimized kernels yourself on a number of ARM and x86 CPUs, or try the model using a web demo.

Crucially, the researchers say these improvements don't come at the cost of performance on various benchmarks testing math and “knowledge” capabilities (although that claim has yet to be independently verified). Averaging the results across several common benchmarks, the researchers found that BitNet achieves capabilities roughly on par with leading models in its size class while offering dramatically better efficiency.

Despite its smaller memory footprint, BitNet still performs similarly to “full precision” weighted models on many benchmarks.

Despite the apparent success of this “proof of concept” BitNet model, the researchers write that they don't quite understand why the model works as well as it does with such simplified weighting. Digging deeper into the theoretical underpinnings of why 1-bit training works at scale remains an open area, they write. And more research is still needed to get these BitNet models to compete with the overall size and context window “memory” of today's largest models.

Still, this new research shows a potential alternative approach for AI models that are facing spiraling hardware and energy costs from running on expensive and powerful GPUs. It's possible that today's “full precision” models are like muscle cars that waste a lot of energy and effort when the equivalent of a good subcompact could deliver similar results.