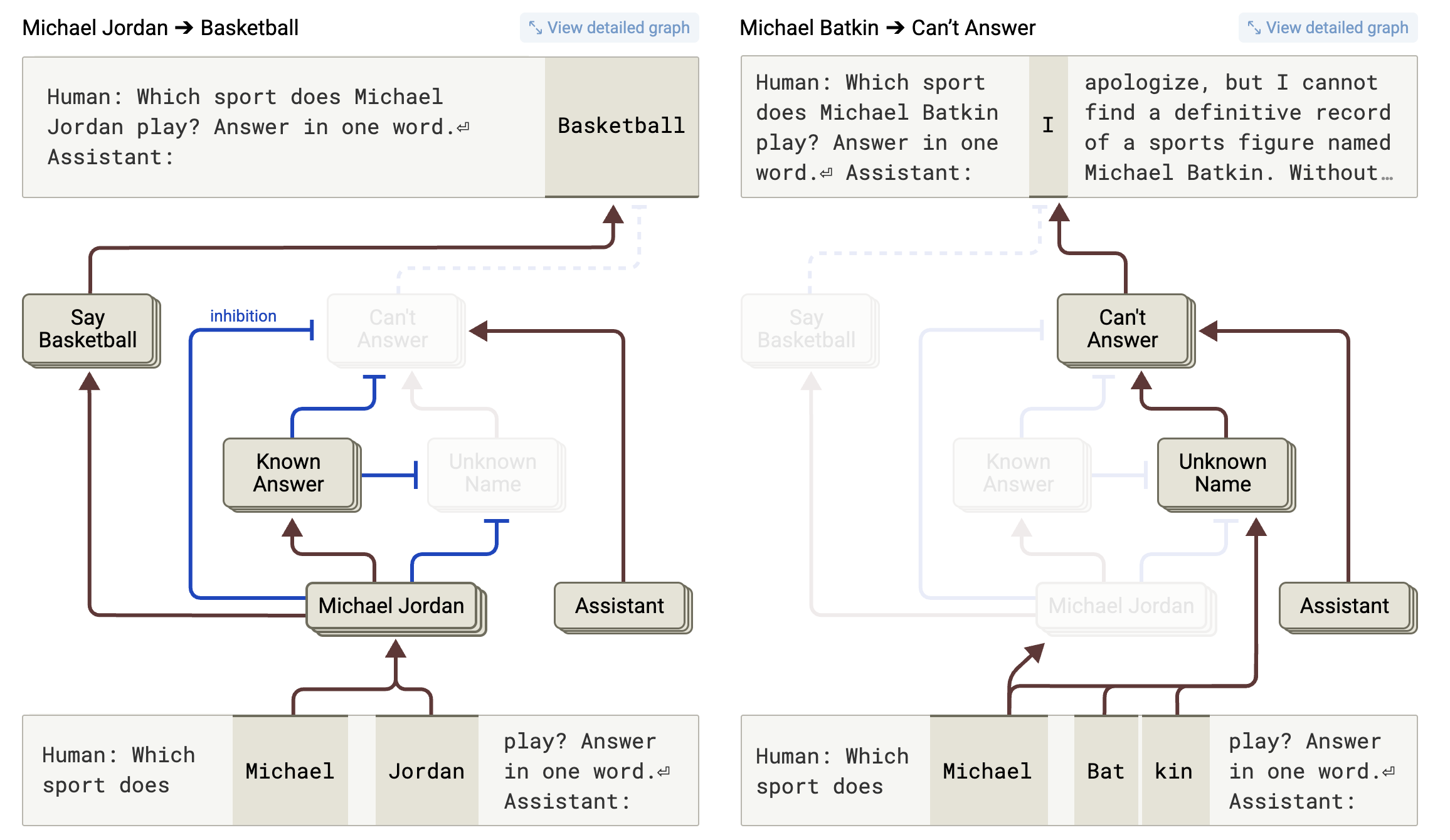

Fine-tuning helps mitigate this problem, guiding the model to act as a helpful assistant and to refuse to complete a prompt when its related training data is sparse. That fine-tuning process creates distinct sets of artificial neurons that researchers can see activating when Claude encounters the name of a “known entity” (e.g., “Michael Jordan”) or an “unfamiliar name” (e.g., “Michael Batkin”) in a prompt.

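Activating the “unfamiliar name” feature amid an LLM's neurons tends to promote an internal “can't answer” circuit in the model, the researchers write, encouraging it to provide a response starting along the lines of “I apologize, but I cannot …” In fact, the researchers found that this “can't answer” circuit tends to be on by default in the fine-tuned assistant version of the model, making it reluctant to answer a question unless other active features in its neural net suggest that it should.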

That's what happens when the model encounters a well-known term like “Michael Jordan” in a prompt: the “known entity” feature activates and in turn causes the neurons in the “can't answer” circuit to be “inactive or more weakly active,” the researchers write. Once that happens, the model can dive deeper into its graph of Michael Jordan-related features to provide its best guess at an answer to a question like “What sport does Michael Jordan play?”

Recognition vs. recall

Anthropic's research found that artificially increasing the neurons' weights in the “known answer” feature could force Claude to confidently hallucinate information about completely made-up athletes like “Michael Batkin.” That kind of result leads the researchers to suggest that “at least some” of Claude's hallucinations are related to a “misfire” of the circuit inhibiting that “can't answer” pathway; that is, situations where the “known entity” feature (or others like it) is activated even when the token isn't actually well represented in the training data.
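To make the described mechanism concrete, here is a toy sketch in Python. It is not Anthropic's method or code: the function names, the numeric constants, and the `boost` parameter are all hypothetical, chosen only to illustrate a refusal signal that is on by default, gets inhibited by a “known entity” signal, and misfires when that signal is raised artificially.

```python
# Toy illustration only. All names and numbers below are assumptions,
# not values or APIs taken from the research.

DEFAULT_REFUSAL = 1.0      # the "can't answer" circuit starts in the "on" position
INHIBITION_STRENGTH = 1.5  # how strongly "known entity" suppresses refusal
REFUSAL_THRESHOLD = 0.5    # above this, the toy model declines to answer

# Hypothetical count of how often each name appeared in training data.
TRAINING_COUNTS = {"Michael Jordan": 1000}


def known_entity_activation(name, training_counts):
    """Stand-in for the "known entity" feature: grows toward 1 with exposure."""
    count = training_counts.get(name, 0)
    return count / (count + 10)


def respond(name, training_counts, boost=0.0):
    """Return "answer", "refuse", or "hallucinate" for a question about `name`.

    `boost` mimics artificially raising the "known entity" activation.
    """
    known = known_entity_activation(name, training_counts) + boost
    # The "known entity" signal inhibits the default-on refusal signal.
    refusal = max(0.0, DEFAULT_REFUSAL - INHIBITION_STRENGTH * known)
    if refusal > REFUSAL_THRESHOLD:
        return "refuse"
    # Refusal was suppressed: the output is only grounded if the toy model
    # actually has information about the name.
    return "answer" if name in training_counts else "hallucinate"


print(respond("Michael Jordan", TRAINING_COUNTS))             # answer
print(respond("Michael Batkin", TRAINING_COUNTS))             # refuse
print(respond("Michael Batkin", TRAINING_COUNTS, boost=1.0))  # hallucinate
```

The third call corresponds to the “misfire” case: the refusal signal is switched off even though nothing is known about the name, so the toy model goes ahead and makes something up.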