Google says Gemma 3 “is the world’s best single-accelerator model.” However, not all versions of the model are ideal for local processing. It comes in various sizes, from a petite text-only 1 billion-parameter model that can run on almost anything to a 27 billion-parameter version that gobbles up RAM. In lower-precision modes, the smallest Gemma 3 model can occupy less than a gigabyte of memory, but the super-sized versions need 20GB-30GB even at 4-bit precision.
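To put the memory figures in perspective, here is a rough back-of-the-envelope sketch that estimates the memory taken by model weights alone, given a parameter count and a precision. The function name `weight_memory_gb` is hypothetical, and the calculation is an assumption-laden lower bound: it ignores runtime overhead such as activations and context cache, which is presumably part of why real-world requirements for the largest model are higher than the raw weight size.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for the weights only, in gigabytes (10^9 bytes).

    Illustrative estimate: parameters * bits / 8 gives bytes.
    It does not include activations, context cache, or framework overhead.
    """
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Parameter counts mentioned in the article: 1 billion and 27 billion.
    for label, params in [("1B", 1e9), ("27B", 27e9)]:
        for bits in (16, 8, 4):
            gb = weight_memory_gb(params, bits)
            print(f"{label} model at {bits}-bit: ~{gb:.1f} GB of weights")
```

By this estimate, the 1 billion-parameter model at 4-bit precision needs about half a gigabyte for its weights, which fits the "less than a gigabyte" claim. The 27 billion-parameter model comes to roughly 13.5GB of weights at 4-bit, so the rest of the 20GB-30GB requirement would have to come from everything else the model needs at runtime.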

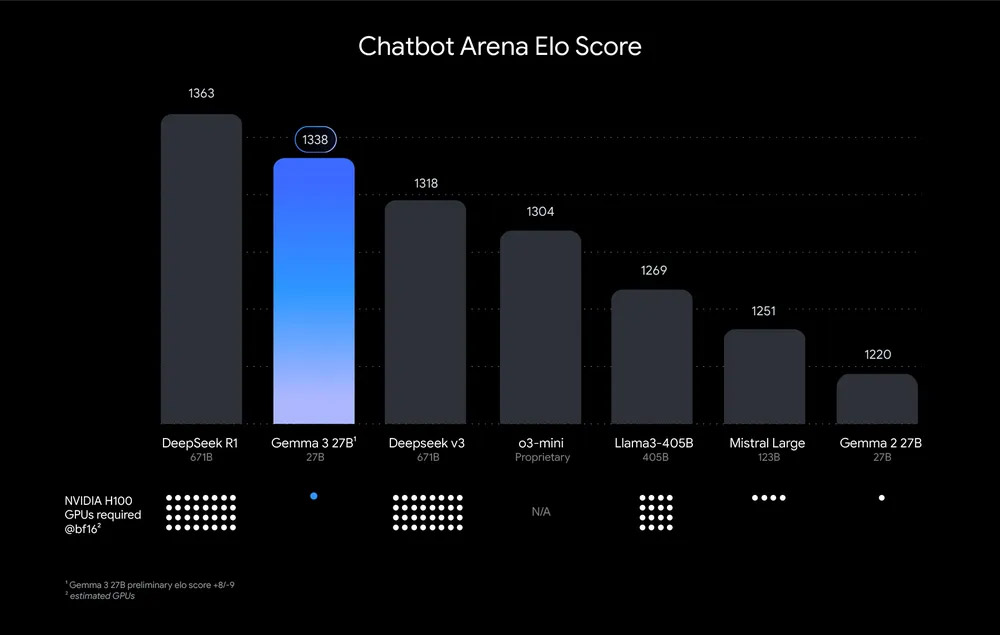

But how good is Gemma 3? Google has provided some data, which appears to show substantial improvements over most other open source models. Using the Elo metric, which measures user preference, Gemma 3 27B blows past Gemma 2, Meta Llama 3, OpenAI o3-mini, and others in chat capabilities. In this relatively subjective test, it does not quite catch up to DeepSeek R1. However, it is running on a single Nvidia H100 accelerator here, while most other models require a gaggle of GPUs. Google says Gemma 3 is also more capable when it comes to math, coding, and following complex instructions. However, it does not offer any numbers to back that up.

Subjective user preference Elo scores show people dig Gemma 3 as a chatbot.

Credit: Google

Google has the latest Gemma model available online in Google AI Studio. You can also fine-tune the model using tools like Google Colab and Vertex AI, or simply use your own GPU. The new Gemma 3 models are open source, so you can download them from repositories like Kaggle or Hugging Face. However, Google's license agreement limits what you can do with them. Regardless, Google won't know what you're exploring on your own hardware, which is the advantage of having a more efficient local model like Gemma 3.

No matter what you want to do, there is a Gemma model that will fit on your hardware. Need inspiration? Google has a new “Gemmaverse” community to highlight applications built with Gemma models.